AI Resurrects Parkland Victim: Gun Control Interview Ignites Outrage

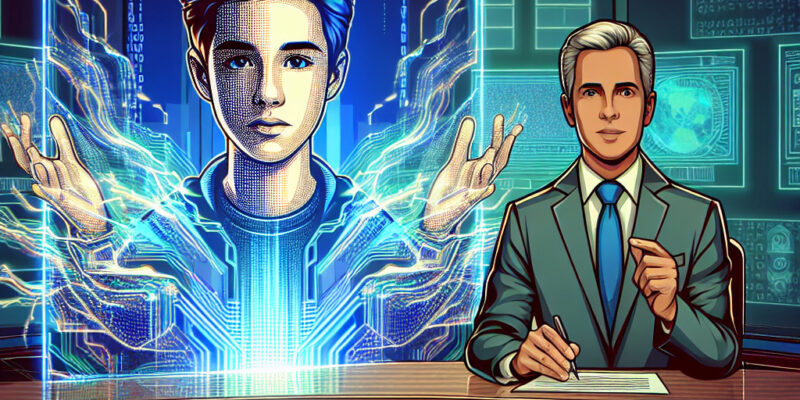

In recent days, an emotionally charged and controversial use of artificial intelligence has drawn wide attention. It centers on Joaquin Oliver, a victim of the 2018 Parkland school shooting, and an AI-generated avatar built to represent him. The avatar appeared in an interview on Jim Acosta’s show, prompting debate over both the power and the pitfalls of using technology in so poignant and sensitive a context.

Joaquin Oliver was one of the 17 people killed in the mass shooting at Marjory Stoneman Douglas High School in Parkland, Florida. Since the shooting, survivors and victims’ families have kept up a sustained call for stronger gun control. The appearance of Joaquin’s digital likeness, created to advocate for stricter gun legislation, adds a new dimension to that campaign.

This unusual use of technology raises profound ethical questions. On one hand, it can amplify urgent messages, such as those against gun violence, with unprecedented emotional force. An avatar that addresses audiences directly can forge a powerful connection and raise awareness in ways that traditional advocacy might not. On the other hand, the approach treads on sensitive ground, above all the question of consent: Joaquin cannot say how he would feel about his likeness being used this way. Even if his family consented, critics question the broader implications of representing deceased people through AI without their explicit prior approval.

The episode featuring Joaquin’s avatar has become a catalyst for public debate. Some viewers welcomed it as a bold, innovative way to keep the conversation about gun control alive. Others were uncomfortable, questioning the morality of digitally resurrecting someone for advocacy. That discomfort is understandable: society is still working out what respectful boundaries look like for digital representations of the dead.

The intersection of technology and advocacy has long been complex, and AI adds to that complexity by blurring the lines between past and present, and between the real and the virtual. Advocates of gun reform may see this as a necessary evolution of activism, a tool for generating empathy and driving change. Detractors may see it as an exploitation of tragedy, one that risks overshadowing the very cause it aims to promote.

There’s also the question of how long such digital campaigns can sustain their impact. Can an emotionally charged AI avatar produce lasting change, or will it stir emotions only briefly without leading to substantive shifts in policy? Technology can augment advocacy efforts, but lasting change has typically depended on sustained action and dialogue.

As technology continues to evolve, society will need to remain vigilant about how these tools are used, especially on sensitive topics. Joaquin’s digital presence shows that technology can be a double-edged sword, offering new avenues for expression and remembrance while testing our ethical limits and cultural norms.

Clearly, the conversation about AI, ethics, and advocacy is far from over. The use of Joaquin Oliver’s AI likeness is emblematic of the broader questions society must address as technology becomes more entangled with our daily lives and values. How we answer them will shape the future of both technology and activism. As individuals and communities, we must engage in these conversations actively, walking the fine line between honoring the memory of the dead and respecting both the living and the deceased.